CBC appearances

Last month I wrote about poking into the CBC’s list of public appearances made by its on-air hosts, which emerged after an interview on Jesse Brown’s Canadaland caused a ruckus. Eventually the CBC started making data available about where its hosts appeared and whether they were paid. Out of curiosity, I decided to dig into the data a little more. Here’s what I found, and how.

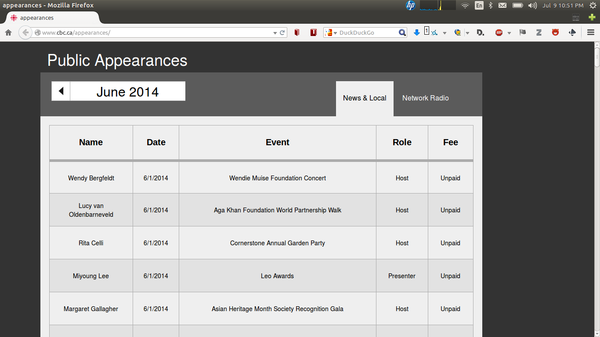

As I write this in early July, the default data showing on the appearances listing is for June. It looks like this:

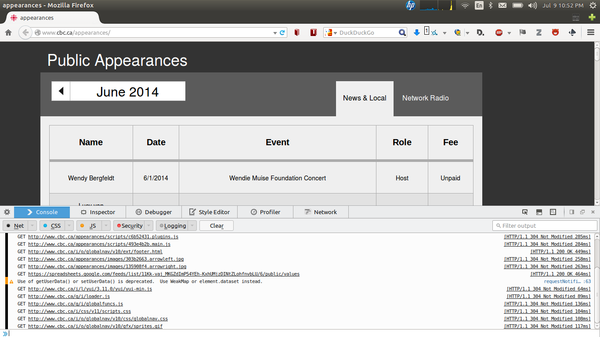

If you look at the source you won’t see all of the data in the page itself—it’s not a big table that someone entered by hand, for example. Where’s it all coming from? It’s not obvious in the page source because it’s happening in some Javascript, but if you use Firefox’s web console (Ctrl-Shift-i) to follow what’s going on when the page loads, you’ll see that along with all the stuff it’s getting from the CBC’s web site it’s also getting something from Google:

The line in question is:

GET https://spreadsheets.google.com/feeds/list/11Kk-vaj_MKGZdImP54YEh-KxhUMjzDINtZLohfnvbLU/6/public/values?alt=json

Aha, a Google spreadsheet! What’s in there if we look at it ourselves? It’s JSON, so run it through jsonlint to clean it up:

curl "https://spreadsheets.google.com/feeds/list/11Kk-vaj_MKGZdImP54YEh-KxhUMjzDINtZLohfnvbLU/6/public/values?alt=json" | jsonlint | more

Here’s the top:

{
  "version": "1.0",
  "encoding": "UTF-8",
  "feed": {
    "xmlns": "http://www.w3.org/2005/Atom",
    "xmlns$openSearch": "http://a9.com/-/spec/opensearchrss/1.0/",
    "xmlns$gsx": "http://schemas.google.com/spreadsheets/2006/extended",
    "id": {
      "$t": "https://spreadsheets.google.com/feeds/list/11Kk-vaj_MKGZdImP54YEh-KxhUMjzDINtZLohfnvbLU/6/public/values"
    },
    "updated": {
      "$t": "2014-07-02T20:32:00.256Z"
    },
    "category": [
      {
        "scheme": "http://schemas.google.com/spreadsheets/2006",
        "term": "http://schemas.google.com/spreadsheets/2006#list"
      }
    ],
    "title": {
      "type": "text",
      "$t": "June 2014"
    },

Bunch of Google stuff … let’s skip by that and look at a chunk from the middle:

"title": {
  "type": "text",
  "$t": "Carole MacNeil"
},
"content": {
  "type": "text",
  "$t": "date: 6/3/2014, event: The Panel: Debate on Economic Diplomacy, role: Moderator, fee: Unpaid"
},
"link": [
  {
    "rel": "self",
    "type": "application/atom+xml",
    "href": "https://spreadsheets.google.com/feeds/list/11Kk-vaj_MKGZdImP54YEh-KxhUMjzDINtZLohfnvbLU/6/public/values/d2mkx"
  }
],
"gsx$name": {
  "$t": "Carole MacNeil"
},
"gsx$date": {
  "$t": "6/3/2014"
},
"gsx$event": {
  "$t": "The Panel: Debate on Economic Diplomacy"
},
"gsx$role": {
  "$t": "Moderator"
},
"gsx$fee": {
  "$t": "Unpaid"
}

Now we’re getting somewhere. There’s a name, a date, an event, a role, and a fee category. There’s the data! It’s all jumbled up and messy the way we’re seeing it, but that’s because it’s ugly JSON. Some Javascript is taking this and reformatting it into a nice table.
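A program can skip the page’s Javascript and read the data straight from those gsx$ fields. Here is a minimal Ruby sketch (not the author’s cbcappearances.rb) that pulls the five columns out of one worksheet’s JSON. The excerpt above shows only the inside of one row. The sketch assumes, based on Google’s list-feed layout, that each row is an object in an "entry" array under "feed".

```ruby
require "json"

# The five columns of each appearance, as named in the feed after the "gsx$" prefix.
FIELDS = %w[name date event role fee].freeze

# Turn the JSON text of one published worksheet into an array of plain hashes.
# ASSUMPTION: rows live in feed["entry"]; if that key is missing we return [].
def rows_from_feed(json_text)
  feed = JSON.parse(json_text).fetch("feed")
  feed.fetch("entry", []).map do |entry|
    # Each cell is wrapped like {"gsx$name": {"$t": "Carole MacNeil"}}
    FIELDS.to_h { |field| [field, entry.dig("gsx$#{field}", "$t")] }
  end
end
```

Feeding it the text fetched with curl above gives one hash per appearance, e.g. {"name" => "Carole MacNeil", "date" => "6/3/2014", ...}.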

Going back to the CBC appearances page, notice there’s also a “Network Radio” tab in the upper right. That data is coming from another Google spreadsheet. Using the console shows this request:

GET https://spreadsheets.google.com/feeds/list/1qqXnT1--bKn2qXigFqoaH09T9vBm4dXlQNQgTjS60tE/6/public/values?alt=json

The ugly string in the middle of the URL is different.

According to the docs on Google’s spreadsheet API, when you publish a spreadsheet the URL has this format:

https://spreadsheets.google.com/feeds/list/key/worksheetId/public/basic

The ugly string in the URLs is the key and 6 is the worksheet ID. June is the sixth month. What if we change that to 5? Turns out it works: you get May’s data.
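The worksheet ID is just the month number, so building every URL is mechanical. Here is a small Ruby sketch. It assumes that worksheet N holds month N in both spreadsheets, as it does for June and May. The two keys are the ones in the requests above; the labels default_tab and network_radio are made up for this sketch.

```ruby
# Spreadsheet keys copied from the two requests the appearances page makes.
# The symbol labels are hypothetical names chosen for this sketch.
SPREADSHEET_KEYS = {
  default_tab: "11Kk-vaj_MKGZdImP54YEh-KxhUMjzDINtZLohfnvbLU",
  network_radio: "1qqXnT1--bKn2qXigFqoaH09T9vBm4dXlQNQgTjS60tE"
}.freeze

# Build the JSON list-feed URL for one worksheet of one published spreadsheet.
def feed_url(key, worksheet_id)
  "https://spreadsheets.google.com/feeds/list/#{key}/#{worksheet_id}/public/values?alt=json"
end

# One URL per spreadsheet per month, ASSUMING worksheet N holds month N.
def all_feed_urls(months)
  SPREADSHEET_KEYS.values.flat_map do |key|
    months.map { |month| feed_url(key, month) }
  end
end
```

Since the listings start in late April, all_feed_urls(4..6) would cover April through June for both spreadsheets.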

So now we know that the CBC is keeping this appearance data in two Google spreadsheets, each of which has one worksheet per month, and both spreadsheets have been published to the web, which means the data is available in JSON or XML. That’s good, but it’s awkward. I wish the CBC were making the data available in a simple format. They’re not, but we can easily work around that.

I wrote a little Ruby script, cbcappearances.rb, that runs through both spreadsheets and all their monthly worksheets, grabs all the data, and dumps it out in nice, easy-to-munge CSV. The resulting CSV looks like this:

name,date,event,role,fee
Adrian Harewood,2014-04-24,Don't Quit your Day Job Fundraiser,Host,Unpaid
Heather Hiscox,2014-04-24,Canadian Medical Hall of Fame 20th Anniversary Gala,Host,Paid
Peter Mansbridge,2014-04-25,Canadian Centre for Male Survivors of Child Sexual Abuse Fundrasier,Speech,Unpaid
David Gray,2014-04-25,Canadian Centre for Male Survivors of Child Sexual Abuse Fundrasier,Host,Unpaid
Miyoung Lee,2014-04-25,RBC Top 25 Canadian Immigrant Awards,Host,Unpaid

All of the data in one file and in a format any program can read. (And with dates specified properly.) Perfect!
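Two things happen between the feed and that CSV: the month/day/year dates become year-month-day, and any field containing a comma gets quoted. Here is a hedged Ruby sketch of just that step; it is not the actual cbcappearances.rb. It assumes one hash per appearance with the keys name, date, event, role and fee. It also assumes dates are always month first, as “6/3/2014” in the June worksheet suggests. Ruby’s standard csv library does the quoting.

```ruby
require "csv"
require "date"

# ASSUMPTION: worksheet dates are month/day/year ("6/3/2014" in June is 3 June 2014).
def iso_date(us_date)
  Date.strptime(us_date, "%m/%d/%Y").iso8601
end

# Turn appearance hashes into CSV text with a header line.
# CSV.generate quotes any field that contains a comma, quote or newline.
def rows_to_csv(rows)
  CSV.generate do |csv|
    csv << %w[name date event role fee]
    rows.each do |row|
      csv << [row["name"], iso_date(row["date"]), row["event"], row["role"], row["fee"]]
    end
  end
end
```

With ISO dates, a plain text sort of the date column is also a chronological sort.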

You can poke at this and dig into it with whatever tool you want—it’s easy to load into any spreadsheet program—but I like R, so I wrote a script that just dumps out some little text reports, host-activity.R:

#!/usr/bin/env Rscript

suppressMessages(library(dplyr))
library(reshape)

a <- read.csv("appearances.csv")

# Count each host's appearances in each fee category (Expenses, Paid, Unpaid)
b <- a %>% select(name, fee) %>% group_by(name, fee) %>% summarise(count=n())

# Reshape to one row per host and one column per fee category, filling gaps with 0
appearances <- cast(b, name~fee, fill = 0, value = "count")

# Add each host's total and the share of appearances that were paid, as a whole percentage
appearances <- mutate(appearances, Total = Expenses + Paid + Unpaid, Paid.PerCent = 100 * round(Paid / Total, digits = 2))

# The "busy" threshold is the mean number of appearances per host
print("Define as being 'busy' anyone who has more than this many appearances:")
busy.number <- mean(appearances$Total)
busy.number

print("Who is busy?")
busy <- subset(appearances, Total >= busy.number)
busy %>% arrange(desc(Total))

print("Of busy people, who has only done paid appearances?")
subset(busy, Paid.PerCent == 100) %>% arrange(desc(Total))

print("Of busy people, who has never done a paid appearance?")
subset(busy, Paid.PerCent == 0) %>% arrange(desc(Total))

print("Of the other busy people, who has some paid and some unpaid appearances?")
subset(busy, Paid.PerCent > 0 & Paid.PerCent < 100) %>% arrange(desc(Total))

(If you get the data and run these commands one by one, you can examine the data frames at each step to see how the data gets simplified and reshaped.)

If you run it, it doesn’t make any fancy charts, it just tells you who’s been busy and how:

[1] "Define as being 'busy' anyone who has more than this many appearances:"
[1] 2.333333

[1] "Who is busy?"
   name                    Expenses Paid Unpaid Total Paid.PerCent
 1 Lucy van Oldenbarneveld        0    0     12    12            0
 2 Adrian Harewood                0    0     10    10            0
 3 Amanda Lang                    0    8      0     8          100
 4 Nora Young                     0    6      2     8           75
 5 Peter Mansbridge               0    4      4     8           50
 6 Rex Murphy                     0    8      0     8          100
 7 Bob McDonald                   0    4      3     7           57
 8 Laurence Wall                  0    0      7     7            0
 9 Matt Galloway                  0    0      7     7            0
10 Bruce Rainnie                  0    0      6     6            0
11 Doug Dirks                     0    1      5     6           17
12 Evan Solomon                   0    3      3     6           50
13 Wendy Mesley                   0    4      2     6           67
14 Craig Norris                   0    0      5     5            0
15 Heather Hiscox                 0    5      0     5          100
16 Miyoung Lee                    0    0      5     5            0
17 Stephen Quinn                  0    0      5     5            0
18 Brian Goldman                  0    4      0     4          100
19 Jian Ghomeshi                  0    4      0     4          100
20 Rick Cluff                     0    0      4     4            0
21 Shelagh Rogers                 0    0      4     4            0
22 Stephanie Domet                0    1      3     4           25
23 Tom Harrington                 2    0      2     4            0
24 Anne-Marie Mediwake            0    0      3     3            0
25 Dave Brown                     0    0      3     3            0
26 David Gray                     0    0      3     3            0
27 Duncan McCue                   0    1      2     3           33
28 Frank Cavallaro                0    0      3     3            0
29 Harry Forestell                0    0      3     3            0
30 Joanna Awa                     0    0      3     3            0
31 Loren McGinnis                 0    0      3     3            0
32 Margaret Gallagher             0    0      3     3            0
33 Mark Connolly                  0    2      1     3           67
34 Mark Kelley                    0    2      1     3           67
35 Matt Rainnie                   0    0      3     3            0
36 Shane Foxman                   0    0      3     3            0
37 Wendy Bergfeldt                0    0      3     3            0

[1] "Of busy people, who has only done paid appearances?"
   name                    Expenses Paid Unpaid Total Paid.PerCent
 1 Amanda Lang                    0    8      0     8          100
 2 Rex Murphy                     0    8      0     8          100
 3 Heather Hiscox                 0    5      0     5          100
 4 Brian Goldman                  0    4      0     4          100
 5 Jian Ghomeshi                  0    4      0     4          100

[1] "Of busy people, who has never done a paid appearance?"
   name                    Expenses Paid Unpaid Total Paid.PerCent
 1 Lucy van Oldenbarneveld        0    0     12    12            0
 2 Adrian Harewood                0    0     10    10            0
 3 Laurence Wall                  0    0      7     7            0
 4 Matt Galloway                  0    0      7     7            0
 5 Bruce Rainnie                  0    0      6     6            0
 6 Craig Norris                   0    0      5     5            0
 7 Miyoung Lee                    0    0      5     5            0
 8 Stephen Quinn                  0    0      5     5            0
 9 Rick Cluff                     0    0      4     4            0
10 Shelagh Rogers                 0    0      4     4            0
11 Tom Harrington                 2    0      2     4            0
12 Anne-Marie Mediwake            0    0      3     3            0
13 Dave Brown                     0    0      3     3            0
14 David Gray                     0    0      3     3            0
15 Frank Cavallaro                0    0      3     3            0
16 Harry Forestell                0    0      3     3            0
17 Joanna Awa                     0    0      3     3            0
18 Loren McGinnis                 0    0      3     3            0
19 Margaret Gallagher             0    0      3     3            0
20 Matt Rainnie                   0    0      3     3            0
21 Shane Foxman                   0    0      3     3            0
22 Wendy Bergfeldt                0    0      3     3            0

[1] "Of the other busy people, who has some paid and some unpaid appearances?"
   name                    Expenses Paid Unpaid Total Paid.PerCent
 1 Nora Young                     0    6      2     8           75
 2 Peter Mansbridge               0    4      4     8           50
 3 Bob McDonald                   0    4      3     7           57
 4 Doug Dirks                     0    1      5     6           17
 5 Evan Solomon                   0    3      3     6           50
 6 Wendy Mesley                   0    4      2     6           67
 7 Stephanie Domet                0    1      3     4           25
 8 Duncan McCue                   0    1      2     3           33
 9 Mark Connolly                  0    2      1     3           67
10 Mark Kelley                    0    2      1     3           67
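For anyone without R, the same busy-and-paid sorting can be sketched in Ruby. This is an illustration, not what produced the output above. It reads one hash per appearance (name and fee, as in appearances.csv) and uses the mean total as the threshold, like busy.number. It compares raw counts instead of the rounded Paid.PerCent. As in the R version, Expenses counts as not paid, which is why Tom Harrington lands among the never-paid.

```ruby
# Count each host's appearances by fee category ("Paid", "Unpaid", "Expenses").
def tally(rows)
  counts = Hash.new { |hash, name| hash[name] = Hash.new(0) }
  rows.each { |row| counts[row["name"]][row["fee"]] += 1 }
  counts
end

# Group busy hosts (total at or above the mean total) by how they were paid.
def busy_report(rows)
  counts = tally(rows)
  totals = counts.transform_values { |by_fee| by_fee.values.sum }
  threshold = totals.values.sum.to_f / totals.size # mean appearances per host
  busy = totals.select { |_name, total| total >= threshold }
  busy.keys.group_by do |name|
    paid = counts[name]["Paid"] # missing categories default to 0
    if paid == totals[name] then :only_paid
    elsif paid.zero? then :never_paid
    else :mixed
    end
  end
end
```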

Lucy van Oldenbarneveld was extra busy because she hosted six gigs at the Ottawa Jazz Festival.

Rex Murphy and Amanda Lang lead the ranks of people who have only done paid appearances so far. Where did they talk?

Rex Murphy:

- Association of Professional Engineers and Geoscientists of Alberta

- Canadian Association of Members of Public Utility Tribunals

- Building & Construction Trades Unions (Canadian Office)

- CGOV Asset Management

- Jewish National Fund of Canada

- Association of Canadian Community Colleges

- Fort McMurray Chamber of Commerce: conference

- Canadian Taxpayers Federation

Amanda Lang:

- Canadian Hotel Investment Conference

- BASF Canada

- Canadian Restaurant Investment Summit

- Young Presidents Organization

- Alberta Urban Development Institute

- GeoConvention Show Calgary

- Manulife Asset Management Seminar

- Manulife Asset Management Seminar

Hmm. Both generally businessy, and tilting Alberta-wards. Compare to Toronto radio host Matt Galloway, all of whose seven appearances were unpaid:

- Food on Film - TIFF Series (3 of 6)

- Toronto Region Immigrant Employment Council 10th Anniversary & Immigrant Success Awards

- Food on Film - TIFF Series (4 of 6)

- Dundas West Fest Lulaworld Stage

- YorkTown Family Services Dinner

- Toronto Community Foundation Vital Toronto Celebration

- Toronto International Film Festival Food on Film Series

The listings start on 24 April, so there are only two months and a bit of data in the feeds right now, but it’s a good start. It will be interesting to watch how things change over the course of the year. I’ll rerun the script every now and then and if anything looks interesting, I’ll post about it. Of course, going deeper than this, it will be important to see if anyone seems to be in a conflict of interest.

AWE 2014 Thoughts

Here’s the fifth (first, second, third, fourth) and final post about Augmented World Expo 2014.

Themes

Context and storytelling were two common themes at the conference. I think even Ronald Azuma, whose 1997 definition of AR still stands (“1. Combines real and virtual; 2. Interactive in real time; 3. Registered in 3-D”), mentioned context as a key ingredient—you can certainly have a system that meets those three criteria but is still uninteresting or unusable.

I get the sense that for all the innovation and research going on in AR right now, for vendors there are two themes, the two ways they can make money: marketing and industry. No one normal is pulling out their phone and calling up an AR view of what’s around them. The hardware makes it a bit of a chore, the software is siloed, and most of the content isn’t particularly interesting or could be seen better on a map.

With advertising and marketing—through “interactive print” or enhanced billboards, posters and catalogues—there’s a reason, a need, for someone to run the AR app: enhanced product information, a chance to win something, etc. The user chooses to use AR because they get something.

With industrial applications, common examples are finding things in a warehouse (imagine yourself looking for something on those shelves at the end of your odyssey through Ikea) or working on engines or other complex machinery. The user wants to, or is told to, wear the glasses or use the app, because it makes work easier or makes them more productive.

Things will change. The research, the new technology, and the attention to context and storytelling will make better AR. But it will be a long while before regular folks are wearing AR devices every day.

Privacy

“Got about 45 seconds to argue about privacy,” Robert Scoble said in his keynote.

I was amazed by the lack of discussion of privacy at the conference. Perhaps I shouldn’t have been. The vendors don’t want to talk about it. Why would they? It would just turn people off. Will there be more at the academic conference this summer, ISMAR 2014? I hope so.

The closing panel discussion, The 3 ‘P’s of the Future Augmented World: Predictions, Privacy, & Pervasiveness, had some good commentary in it (and some I’d take strong exception to), but the privacy discussion was all centred around people observing other people. Rob Manson tried to change the direction by asking a question about when the problem is “deeper than wearables: biological, like pacemakers” and was cavalierly dismissed: right now cars can be hacked, planes can be hacked, they’re all computers, everything can be hacked, that’s old news. I wish Karen Sandler had been there to speak to that: she’s an expert on free software, she has an implanted heart device, and she wrote Killed by Code: Software Transparency in Implantable Medical Devices.

“I want to help frame the conversation before someone else frames it for us,” said Robert Hernandez. The conversation has been framed. It was framed by Edward Snowden and his whistleblowing revelations.

There are two sides to this in augmented reality.

First, what the companies know. Running an app can mean sharing location information, the camera view, and more. Who are the companies, what are their privacy policies, are they using encryption on all communications, how secure are their servers? Privacy on smartphones is a nightmare anyway; installing proprietary apps from unfamiliar companies and granting them access to who knows what so you can see an auggie on a movie poster just makes it worse.

Second, what the spy agencies know. Assume that every AR device has been broken by them. We know they can listen through your device’s microphone without you knowing. We know they’ve got back doors and taps into pretty much everything. Assume they’ve broken Glass and any other wearable, and they can listen to the microphone and see the camera feed whenever they want without the wearer knowing. They know where the wearer is, they know what’s being seen and heard, they’ve got it all, and they can tie it in with everything else they know. This is what everyone in AR needs to remember and to work against.

You don’t give up easy on this. You fight it, because privacy is a right and we need to defend it. What “privacy” means may be changing, but it doesn’t include the state recording everything everyone sees from head-mounted cameras.

What can we do about this? How can we use Tor for AR? Who’s working on privacy and AR?

Free as in freedom

One part of the solution to the privacy problem is free software. There is little free software in AR right now. The AR Standards Community is working on open standards and interoperability, and those are crucial to all of this work, but the implementations, not just the standards, need to be free and open.

The only FOSS AR browser I know is Mixare (there is also an iOS version), which is along the lines of Layar or Junaio. It’s under GPL v3. The project’s gone dormant, but the code’s there if people want to go back to it.

There are a number of point-of-interest providers for Layar and similar browsers out there, like my own Avoirdupois (GPL v3), which is a fairly straightforward web service written in Ruby with Sinatra. It will be simple to make it feed out POIs in ARML or any other open format.

There is OpenCV for computer vision: “OpenCV was built to provide a common infrastructure for computer vision applications and to accelerate the use of machine perception in the commercial products.” It’s under the three-clause BSD license.

But the best possibility out now is awe.js, the “jQuery for the Augmented Web” (MIT License). I wrote about it back in January. Here’s a nice demo video.

That’s great work! Check out the other videos BuildAR has made showing the augmented web in action.

I’ll be hacking on awe.js, and I hope others will too. Build more free and open source augmented reality software! Server-side, client-side, libraries, apps, whatever. The quickest route is through the web, the way awe.js is doing it. Web browsers can get access to most (soon all) of the sensors and data sources on a device (with permission from the user) and do AR in a browser window. Amazing! The standards that let the browser talk to the device are free and open, the standards that make the web work are free and open, and the software that builds the browser is (I hope) free and open. Then the browsers can be deployed on any platform, smartphone or tablet or glass or gesture-recognition system. Everything has a web browser in it. The platforms are probably proprietary but that may change.

The augmented web is a good idea for two reasons. First and more practically, when the web hackers get on something it will grow quickly. You’ve got to be pretty devoted to write an app for Android or iOS, and the entire culture there seems to encourage people to keep the work proprietary. But hacking some web stuff, that’s much easier and much more fun, and the environment is much freer and more open. When someone makes a WordPress plugin or Drupal extension to make using awe.js drag-and-drop easy, bingo, instant widespread deployment. Second and more fundamentally, we all need to control and own this ourselves. We can’t give away so much information and power over our daily lives to organizations.

I want glasses or lenses I can wear when I choose to see what I want. I don’t want to see a corporate-branded view of life knowing everything around me is being fed back to the spy agencies.

Julian Oliver and Critical Engineering

I saw mentions of Julian Oliver’s glasshole.sh script recently. It begins:

#!/bin/bash
#
# GLASSHOLE.SH
#
# Find and kick Google Glass devices from your local wireless network. Requires
# 'beep', 'arp-scan', 'aircrack-ng' and a GNU/Linux host. Put on a BeagleBone
# black or Raspberry Pi. Plug in a good USB wireless NIC (like the TL-WN722N)
# and wear it, hide it in your workplace or your exhibition.
#
# Save as glasshole.sh, 'chmod +x glasshole.sh' and exec as follows:
#
# sudo ./glasshole.sh <WIRELESS NIC> <BSSID OF ACCESS POINT>

I assumed Oliver was a provocative hacker. He is, but he’s also an artist. He’s a provocative hacker artist. And that script is some provocative art.

I didn’t know of Oliver’s work before, but his web site is full of marvellous technological art projects, like Solitary Confinement:

Discovered in June 2010, the Stuxnet Virus is considered a major cyber weapon.

Initially spreading via Microsoft Windows, it targets industrial software and equipment and is the first discovered malware that spies on and subverts industrial systems. Its primary target is the Siemens Simatic S7-300 PLC CPU, commonly found in large scale industrial sites, including nuclear facilities. Most evidence suggests this virus is a U.S/Israeli project designed to sabotage Iranian uranium enrichment programs.

Solitary Confinement is a small, non-interactive installation comprising a computer quarantined within a glass vitrine that has been deliberately infected with the Stuxnet Virus. It sits in a vitrine, powered on, with one end of a red ethernet network cable that is connected to the gallery network laying unplugged near the port on the PC.

There’s background in Binary Operations: Stuxnet.exe:

- Stuxnet held captive within the image of its target, then buried.

As mentioned above, Stuxnet is designed to target the Siemens S7-300 system. Here I have directly put Stuxnet inside the image of its target, using a steganography program, embedded using the encryption algorithm Rijndael, with a key size of 256 bits. The Stuxnet worm I embedded is called ‘malware.exe’ and has md5sum 016169ebebf1cec2aad6c7f0d0ee9026. Here is a virus report on files with this md5sum.

Clicking on the below image will take you to a 9.7M JPEG image in which Stuxnet is embedded.

Or the Transparency Grenade—there are lots of pictures here, and you can download the files necessary to make your own:

The lack of Corporate and Governmental transparency has been a topic of much controversy in recent years, yet our only tool for encouraging greater openness is the slow, tedious process of policy reform.

Presented in the form of a Soviet F1 Hand Grenade, the Transparency Grenade is an iconic cure for these frustrations, making the process of leaking information from closed meetings as easy as pulling a pin.

Equipped with a tiny computer, microphone and powerful wireless antenna, the Transparency Grenade captures network traffic and audio at the site and securely and anonymously streams it to a dedicated server where it is mined for information. User names, hostnames, IP addresses, unencrypted email fragments, web pages, images and voice extracted from this data and then presented on an online, public map, shown at the location of the detonation.

It’s all well worth browsing.

Oliver’s a critical engineer. The group gives workshops, intensive week-long courses about Unix and networking—check out the description of the NETworkshop, for example:

A small scale model of the Internet is created in class for the purposes of study with which we interact over another self-built local network. By learning about routing, addressing, core protocols, network analysis, network packet capture and dissection, students become dexterous and empowered users of computer networks. At this point students are able to read and traverse wide (the Internet) and local area networks with agility, using methods and tools traditionally the domain of experienced network administrators, hackers and security experts.

In the second phase of the workshop students learn to read network topologies as political control structures, seeing how corporations and governments shape and control the way we use computer networks.

Students learn to study these power structures by tracing the flow of packets as they pass over land and sea.

Macro-economic and geostrategic speculations are made.

Finally, encryption and anonymity strategies and theory are addressed, with a mind to defending and asserting the same basic civil rights we uphold in public space.

That is a serious workshop. No previous knowledge required, either. Artists (or anyone) coming out after that week must have their brains on fire.

In the library world we never stop discussing what gets taught in library schools. (I suppose no field ever does.) I wish library schools had a mandatory IT course like that workshop. (Does the University of Toronto iSchool really have no mandatory IT course for its general LIS stream?)

Oliver, Gordan Savičić and Danja Vasiliev wrote The Critical Engineering Manifesto:

- The Critical Engineer considers Engineering to be the most transformative language of our time, shaping the way we move, communicate and think. It is the work of the Critical Engineer to study and exploit this language, exposing its influence.

- The Critical Engineer considers any technology depended upon to be both a challenge and a threat. The greater the dependence on a technology the greater the need to study and expose its inner workings, regardless of ownership or legal provision.

- The Critical Engineer raises awareness that with each technological advance our techno-political literacy is challenged.

- The Critical Engineer deconstructs and incites suspicion of rich user experiences.

- The Critical Engineer looks beyond the “awe of implementation” to determine methods of influence and their specific effects.

- The Critical Engineer recognises that each work of engineering engineers its user, proportional to that user’s dependency upon it.

- The Critical Engineer expands “machine” to describe interrelationships encompassing devices, bodies, agents, forces and networks.

- The Critical Engineer observes the space between the production and consumption of technology. Acting rapidly to changes in this space, the Critical Engineer serves to expose moments of imbalance and deception.

- The Critical Engineer looks to the history of art, architecture, activism, philosophy and invention and finds exemplary works of Critical Engineering. Strategies, ideas and agendas from these disciplines will be adopted, re-purposed and deployed.

- The Critical Engineer notes that written code expands into social and psychological realms, regulating behaviour between people and the machines they interact with. By understanding this, the Critical Engineer seeks to reconstruct user-constraints and social action through means of digital excavation.

- The Critical Engineer considers the exploit to be the most desirable form of exposure.

RUSTLE

I recently came across IndieWebCamp and the approach appealed to me. Giving over content to companies like Facebook or Twitter isn’t the way forward. I like controlling my own site, and I like hacking on stuff.

On the technical side of things, I can write something that would let me write a short note here and then push it out to Twitter or somewhere else, but what about webmentions? I use Jekyll to make this site, and everything is completely static. If I used WordPress or something dynamic then I could have a service that accepted incoming information that said “this other web page just linked to you” and push that into a database. But I don’t. What can I do? I could write a little web service. Or I could use an external system, but I don’t much like that idea.

I thought about REST, representational state transfer, “a way to create, read, update or delete information on a server using simple HTTP calls.” Beginning to understand REST changed how I thought about the web.

But does it have to be instantaneous? What about delayed REST? Seems unlikely this idea is new, but I had a thought about a sort of dead drop approach, which I called RUSTLE: REST Updated Some Time Later … Eventually.

Here’s how it could work:

- Another web site calls a URL on my site, doing a POST to create something or a PUT to update something.

- Apache’s .htaccess (or the equivalent in whatever you use) sees it, makes sure all the incoming information is written to a log file even if it’s not in a GET, and sends back a 200 COOL or 200 THANKS. Aside from that, nothing happens.

- Every so often a script examines my log file and determines any actions that need to be done.

- The actions are done (e.g. a “URL linked to this page” is appended to a text file) and any necessary files are updated.

This way security dangers are limited and programming is easier. Instead of requiring some fancy robust caching web service, you can get away with anything down to a shell script and a cron job.
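Here is a rough Ruby sketch of the “every so often” script, under loud assumptions. Apache’s standard access log formats don’t record request bodies, so the sketch assumes a hypothetical log with one request per line: method, path and URL-encoded body, separated by tabs. It also assumes webmentions arrive as a POST to a made-up /webmention path, carrying source and target form fields as the Webmention protocol sends them. The 200 reply is not shown.

```ruby
require "uri"

# ASSUMED log format (hypothetical, not an Apache default), one request per line:
#   METHOD <tab> PATH <tab> URL-encoded request body
def mentions_from_log(lines)
  lines.filter_map do |line|
    http_method, path, body = line.chomp.split("\t", 3)
    # Only POSTs to the hypothetical /webmention path carry mentions
    next unless http_method == "POST" && path == "/webmention" && body
    params = URI.decode_www_form(body).to_h
    source, target = params.values_at("source", "target")
    next unless source && target
    "#{source} linked to #{target}"
  end
end

# The cron job's work: read the log and append any mentions to a plain text file.
def process_log(log_path, mentions_path)
  mentions = mentions_from_log(File.readlines(log_path))
  File.open(mentions_path, "a") { |file| mentions.each { |m| file.puts(m) } }
end
```

As written, process_log rereads the whole log on every run, so a real version would need to remember how far it got, or rotate the log after each run, to avoid appending the same mention twice.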

The web is always in a hurry. Everybody and everything expects an answer immediately. Let’s mellow out and RUSTLE something up when we feel like it.

Uses Emacs

There was a new one up at The Setup, which got me wondering about the Emacs users they’ve profiled. I think this is all of them:

- Joe Armstrong: “Emacs, make and bash for all programming.”

- Mary Rose Cook: “There are three reasons to use emacs. One, it is available on a lot of platforms. Two, it can be used for almost any task. Three, it is very customisable. I only take advantage of reason three. From this perspective, using emacs is kind of like making a piece of art. You start with a big block and you slowly chip away, bringing it closer and closer to what you want.”

- Kieran Healy: “I do most of my writing and all of my data analysis in Emacs 23, which I run full-screen on the left-hand monitor (usually split into a couple of windows).”

- Phil Hagelberg: “I do as much as I can in GNU Emacs since it pains me to use monolithic software that can’t be modified at runtime.”

- Eric S Raymond: “Most of my screen time is spent in a terminal emulator, Emacs, and Firefox.”

- Richard Stallman: “I spend most of my time using Emacs.”

- Benjamin Mako Hill: “People complain about Emacs but I’m actually pretty happy with it.”

- Andrew Plotkin: “I always have Emacs up, in one Terminal.”

- Christian Neukirchen: “Emacs is for almost all development and dealing with mail as well as writing longer texts. I prefer vi for administrative tasks and on remote machines, where I have no configuration of my own.”

- Kate Matsudaira: “Emacs - I have tried moving to Eclipse, vi, and several others, but since I know Lisp well (it was one of the first programming languages I learned!) I can do pretty much anything in Emacs.”

- John MacFarlane: “I use Emacs for just a few things – browsing compressed archives, for example, and maintaining a todo list with org-mode. Evil-mode makes Emacs bearable.”

- Tamas Kemenczy: “Emacs is home sweet home and I use it across the board for development and pretty much anything text-related.”

- Paul Tweedy: “My .emacs file is a carefully-curated history of customisations, workarounds, hacks and blatant bad habits that I’ve grown to depend on over the years.”

- Mark Pilgrim: “Emacs 23 full-screen on the center (widescreen) monitor. I have relatively few Emacs customizations, but the big one is ido-mode, which I just discovered this year (thanks Emacs subreddit).”

- Jonathan Corbet: “The way it usually settles out is that I use emacs when I’m running as my self and vi when I’m doing stuff as root.”

- Aaron Boodman: “Over time, those constraints have whittled my work environment down to the following simple tools: Ubuntu Linux, emacs -nw (terminal mode), Screen, Irssi, Git.”

- Mike Fogus: “However, within the past six months I’ve moved completely to Emacs org-mode with org-mode-babel. I can’t believe that I didn’t switch earlier.”

- Seth Kenlon: “I write a lot, almost always in XML, so I use Emacs in nxml-mode. I use the Docbook schema and process it with xmlto or xsltproc. I also use Emacs for screenplays, organizing tasks, web design, and coding.”

- Neha Narula: “On my research machine I basically live inside Chrome, emacs, a terminal, and git.”

- Bret Taylor: “I write all my code in Emacs, and I despise IDEs.”

- Brad Fitzpatrick: “Linux (Ubuntu and Debian), Chrome, emacs, screen, Go, Perl, gcc, Gimp, tcpdump, wireshark, Xen, KVM, libvirt. etc.”

- John Myles White: “If I’m working only at the command-line, I’ll use emacs.”

- Chris Wanstrath: “I write code and prose in Aquamacs. I’ve even written a few plugins (‘modes’) for it: textmate.el and coffee-mode.”

- Adewale Oshineye: “However I always end up going back to Emacs (AquaMacs on OSX, vanilla Emacs on Linux and NTEmacs in the days when I used Windows), IntelliJ and TextMate.”

- Dhanji Prasanna: “In the Vi/Emacs wars I don’t take sides, I was probably the only Googler who used both equally (and a lot).”

- Tim Bray: “Creative time is spent in Aquamacs Emacs (blogging, coding in languages with poor IDE support like Perl and Erlang), Eclipse (Android development), NetBeans (Java, Ruby, Clojure, and C development), and Gmail (via a Fluidized Safari).”

- Liza Daly: “I’m the only one on the team who uses emacs, but that’s what I learned in college. My brain doesn’t take to GUI development environments.”

- Jason Rohrer: “I code using Emacs, and I compile in the terminal using GNU makefiles and GCC.”

- Josh Nimoy: “I use emacs as my IDE, splitting the editor in half and having eshell in the top window to run the Makefile.”

- John Baez: “I spend a lot of time writing papers and preparing talks with free software: emacs for text editing, LaTeX for typesetting, and IrfanView for editing images. I blog using Wordpress and Google+. Nothing fancy, since I want to focus on the ideas.”

- Hugo Liu: “I like to hack in Python using Emacs in a Terminal window.”

- Daniel Stenberg: “Apart from the OS, I’m a C coder and an Emacs user, and what more do you need?”

- Andrei Alexandrescu: “Anyhow, on a regular basis I use OpenNX to connect remotely to big iron - a blade running CentOS 5.2 - and run emacs remotely for editing.”

- Dana Contreras: “When I need a terminal-based editor, I use Emacs, like god intended.”

- Geoffrey Grosenbach: “Emacs for text editing. Most of the time I don’t know what my fingers are typing. They just do what I’m thinking. To me, that’s the definition of a great text editor.”

- Gabriel Weinberg: “On the server, I’m usually in emacs and messing around with git and nginx.”

- Matthew Mckeon: “My platform for data munging scripts is mostly Ruby these days, however Emacs is my dirty secret.”

- Ben Fry: “Most of my time is spent with Emacs, Processing, Eclipse, Adobe Illustrator and Photoshop CS3.”

- Jonathan Foote: “My tools have not changed much since grad school: emacs for coding and print statements for debugging.”

- Chris Slowe: “Last but not least, the software I actually run at any given time is 90% Emacs, Terminal, and Chrome.”

- Ted Leung: “I typically run tools from the command line - zsh in my case, and do my text editing in Emacs.”

- Andrew Huang: “For day to day stuff, I use emacs for text editing, Firefox for my web browser, and Windows Media Player for music.”

M-x all-praise-emacs

AWE 2014 Glasses

Here’s the fourth (first, second, third) and penultimate post about the Augmented World Expo 2014. This is about the glasses and headsets—the wearables, as everyone there called them. This was all new to me and I was very happy to finally have a chance to try on all the new hardware that’s coming out.

What’s going on with the glasses is incredible. The technology is very advanced and it’s getting better every year. On the other hand, it is all completely and utterly distinguishable from magic. Eventually it won’t be, but from what I saw on the exhibit floor, no normal person is ever going to wear any of these glasses in day-to-day life. Eventually people will be wearing something (I hope contact lenses) but for now, no.

I include Google Glass with that, because anyone wearing them now isn’t normal. Rob Manson let me try on his Glass. My reaction: “That’s it?” “That’s it,” he said. (Interesting to note that there seemed to be no corporate Google presence at the conference at all. Lots of people wearing Glass, though. I hope they won’t think me rude if I say that four out of five Glass wearers, possibly nine out of ten (Rob not being one of those), look like smug bastards. They probably aren’t smug bastards, they just look it.) Now, I only wore the thing for a couple of minutes, but given all the hype and the fancy videos, I was expecting a lot more.

I was also expecting a lot more from Epson’s Moverio BT-200s and Meta’s 01 glasses.

Metaio has their system working on the BT-200s, and I tried that out. In the exhibit hall (nice exhibit, very friendly and knowledgeable staff, lots of visitors) they had an engine up on a table and an AR maintenance app running on a tablet and the BT-200s. On the tablet the app looked very good: from all angles it recognized the parts of the engine and could highlight pieces (I assume they’d loaded in the CAD model) and aim arrows at the right part. It was a fine example of an industrial application, which is what a lot of AR work seems to be right now. I was surprised by the view in the glasses and how, well, crude it was. The cameras in the glasses were seeing what was in front of me and displaying that, with augments, on a sort of low-quality semitransparent video display floating a meter in front of my face. The resolution is 960 x 540, and Epson says the “perceived screen is equivalent to viewing an 80-inch image from 16.4 feet or 5 meters away.” I can’t find specs online that give the field of view as an angle, but it seemed like something like 40°. Your fist at arm’s length covers about 10°, so imagine a rather grainy video four fists wide and two fists high, at arm’s length. The engine was shown with the augments, but it wasn’t exactly lined up with the real engine, so you saw both at the same time. Hard to visualize this, I know.
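Epson’s comparison can be turned into an approximate angle with a little trigonometry. This is only a rough sketch, and it rests on two assumptions Epson’s sentence doesn’t state: that the “80-inch” figure is the image’s diagonal (as with TV sizes), and that the image has the same 16:9 shape as the 960 x 540 display. The function name `field_of_view` is my own, for illustration.

```python
import math

INCH_IN_METRES = 0.0254  # exact by definition


def field_of_view(diagonal_m, distance_m, aspect_w, aspect_h):
    """Angles (in degrees) subtended by a flat virtual image.

    Assumes the image is a rectangle with the given diagonal, centred on the
    line of sight and seen from distance_m away.
    Returns (horizontal, vertical, diagonal).
    """
    # Split the diagonal into width and height using the aspect ratio.
    scale = diagonal_m / math.hypot(aspect_w, aspect_h)
    width = aspect_w * scale
    height = aspect_h * scale

    def angle(size_m):
        # Full angle is twice the half-angle from the centre to one edge.
        return math.degrees(2 * math.atan((size_m / 2) / distance_m))

    return angle(width), angle(height), angle(diagonal_m)


# Assumed reading of Epson's figure: an 80-inch diagonal seen from 5 metres,
# with the 16:9 shape of the 960 x 540 display.
h, v, d = field_of_view(80 * INCH_IN_METRES, 5.0, 960, 540)
print(f"horizontal {h:.1f}°, vertical {v:.1f}°, diagonal {d:.1f}°")
```

Under those assumptions it prints roughly 20.1° horizontal, 11.4° vertical and 23.0° diagonal. By the fist rule of thumb, that is about two fists wide and one fist high.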

Meta had a VIP party on the Wednesday night at the company mansion.

The glasses are built at a rented $US15 million ($16.7 million), 80,937 square metre estate in Portola Valley, California, complete with pool, tennis court and 30-staff mansion. — Sydney Morning Herald

He now lives and works with 25 employees in a Los Altos mansion overlooking Silicon Valley and has four other properties where employees sleep at night.

“We all live together, work together, eat together,” he said. “At certain key points of the year we have mattresses lined all around the living room.” — CNN

On the exhibit floor they had a small space off in the corner, masked with black curtains and identifiable by the META logo and the line-up of people waiting to get in to try the glasses. I lined up, waited, chatted a bit with people near me, and eventually I was ushered into their chamber of mystery and a friendly fellow put the glasses (really a headset right now) on my head and ran me through the demo.

The field of view again was surprisingly small. There was no fancy animation going on, it was just outlined shapes and glowing orbs (if I recall correctly). I’d reach out to grab and move objects, or look to shoot them down, that sort of thing. As a piece of technology under construction, it was impressive, but in no way did it match the hype I’ve seen.

Now, my experience lasted about three minutes, and I’m no engineer or cyborg, and the tech will be much further along when it goes on sale next year, but I admit I was expecting to see something close to the videos they make. That video shows the Pro version, selling for $3,650 USD, so I expect it will be much fancier, but still. They say the field of view is 15 times that of Google Glass … if that’s five times wider and three times higher, it’s still not much, because Glass’s display seems very small. The demo reels must show the AR view filling up the entire screen whereas in real life it would only be a small part of your overall view.

That said, the Meta glasses do show a full 3D immersive environment (albeit a small one). They’re not just showing a video floating in midair. Even if the Meta glasses start off working with just a sort of small box in front of you, you’re really grabbing and moving virtual objects in the air in front of you. That is impressive. And it will only get better, and fast.

I had a brief visit to the Lumus Optical booth, where I tried on a very nice pair of glasses (DK40, I think) with a rich heads-up display. I’m not sure how gaffed it was as a demo, but in my right eye I was seeing a flight controller’s view of airplanes nearby. I could look all around, 360°, up and down, and see little plane icons out there. If I looked at one for a moment it zoomed in on it and popped up a window with all the information about it: airline, flight number, destination, airplane model, etc. The two people at the booth were busy with other people so I wasn’t able to ask them any questions. In my brief test, I liked these glasses: they were trying to do less than the others, but they succeeded better. I’d like to try an astronomy app with a display like this.

Mark Billinghurst’s HitLabNZ had a booth and they were showing their work. They’d taken two depth-sensing cameras and stuck them onto the front of an Oculus Rift: the Rift gives great video and rendered virtual objects, and the cameras locate the hands and things in the real world. Combined, you get a nice system. I tried it out and it was also impressive, but it would have taken me a while longer to get a decent sense of where things were in the virtual space: I was never reaching out far enough. This lab is doing a lot of interesting research and advancing the field.

I also tried a similar hack, an Oculus Rift with a Leap Motion depth sensor duct-taped to the front of it. Leap Motion has just released a new version of the software (it works on the same hardware) and the new skeletal tracking is very impressive. I don’t have any pictures of that and I don’t see any demo videos online, but it worked very well. If you fold your hand up into a fist, it knows.

Here’s a demo video. Imagine that instead of seeing all that on a screen in front of you, you’re wearing a headset (an Oculus) and it’s all floating around in front of you, depth and all, and you can look in any direction. It’s impressive.

AWE 2014 Day Two

Thursday 29 May 2014 was the second and last day of the Augmented World Expo 2014. (Previously: my notes on the preconference day and first day).

Since no one was interested in libraries at the Birds of a Feather session the day before, this time I decided to try FOSS. I grabbed a large card and a marker at the table outside the room. “What’s your table about?” said a fellow sitting there. “Free and open source software,” I said. “I really like SketchUp,” he said. The silence went on uncomfortably long as I tried to figure out what to say, but then we agreed it was an impressive tool, and that designing anything more than a cube could be pretty hard.

Tables about oil and gas, health, manufacturing and venture capital filled up, but no one came to talk about free software, though an English literature professor took pity on me and we had a great talk about some projects he’s doing.

The opening keynote was by Hiroshi Ishii, the head of the Tangible Media Group at MIT’s Media Lab. It doesn’t seem to be on YouTube, but he gave the same talk at Solid eight days before. He’s working on Radical Atoms: “a computationally transformable and reconfigurable material that is bidirectionally coupled with an underlying digital model (bits) so that dynamic changes of physical form can be reflected in digital states in real time, and vice versa… We no longer think of designing the interface, but rather of the interface itself as material.” That doesn’t come anywhere near to describing how remarkable their work is.

The talk was an absolute delight.

The inFORM especially amazed me. Watch the video there.

Next came something completely different that turned out to be bizarre: a talk called “Building the Tech-Enabled Soul” by Tim Chang of the Mayfield Fund, a venture capital firm, where he is “a proven venture investor and experienced global executive, known for his thought leadership in Gamification, Quantified Self, and Behavioral/Social Science-led Design.” Here are some quotes.

“Do you remember the hours you spent crafting your characters [in role-playing games]? You’d take all the traits you wanted to min/max, whether it was strength vs dexterity or intelligence vs wisdom, you’d unlock skill trees and you’d think about how you want to architect your character. I remember when I was in my twenties, it kind of hit me like a blinding flash of the obvious: how come we don’t architect our own lives the way we spend all that time crafting perfect little avatars? That kind of opened me up to this idea of gamification, which is that life itself is a grand game.”

I was shaking my head at this, but then he talked about how thinking of everything in life as a game led him to the quantified self:

“I’ve been body-hacking for a couple of years.”

It was about here that I stopped taking notes and just stared in amazement.

“Prosthetics for the brain. Sounds kind of crazy but we’re already there.”

“How many of you saw the movie Her? I thought that was pretty fantastic.”

At 13:23 it took a wonderful turn to the weird: he got into Borges and The Library of Babel. I was not expecting this. Or any of what followed.

“They were able to extract the smell and the colour of cherries and bananas, which are pretty well known DNA chains, and they were able to mix them into E. coli and other types of organisms, such that you could feed it to your pet and your pet would produce really pleasant-smelling and -coloured excrement.”

“There is a polo horse breeder in Argentina … [who will] use IVF and go create lots of attempts at mutant super polo horses.”

“I want to create the Library of Babel in every possible chain of As, Cs, Ts and Gs.”

“Perhaps that’s what the universe is, a giant kind of Monte Carlo experiment running every possible permutation of all these sorts of things in all configurations, and that there’s many universes running this experiment over and over again.”

“The next step is that as the actuators begin, as we are able to hack our wetware, as we understand our neuroelectrical interfaces of our bodies, I think our fate is to really transcend our physical form and really go beyond that, and figure out what it is that we want to become if all states are possible. Again, this is sounding really really out there.”

The bit on Korean plastic surgery at 17:47 you’ll have to see for yourself.

“I think our memories are going to be up for grabs as well.”

“What I think the holy grail is, is can these technologies be used as an engine for perfect empathy. I don’t want you to tell me what it’s like to be you, I want to know exactly what it feels like to be you.”

“As we become that networked consciousness that everybody talks about, we will become this sort of more global interconnected species. My own theories are that at that point we’ll be welcomed to the club and there will be lots of other species who have way transcended and say, ‘Welcome to the club!’ And then we’ll all link and do that at a galactic inter-species scale and on and on and on it goes.”

“Are we a particle, are we a wave? Property rights vs open source?”

Twenty-five minutes of jaw-dropping astonishment. I read a fair bit of SF. We live in SF now. I just wasn’t expecting to hear this from someone at a company with hundreds of millions of dollars on hand to try to hurry us along to the Singularity.

Next I went to the panel on designing hardware for the interactive world.

Jan Rabaey of the SWARM Lab at UC Berkeley (whose site requires cookies) talked about ubiquitous computing, when devices are all around us, all connected, and form a platform. (Continuing on from Chang, this reminded me of Vinge’s localizers and smart dust in A Deepness in the Sky.) Wearables are here, in an early form. But there’s a problem: stovepiping. Every device a company makes will only talk to other devices from that company. It’s short-term, incompatible and unscalable. (And one thing that made the internet spread so widely is that it works the opposite way.) His solution is the “human internet”: devices on, around and inside you.

This interview with Rabaey goes into all this more, I think.

Interviewer: How exactly do you deliver neural dust into the cortex of the brain?

Rabaey: Ah, good question.

He recommended Pandora’s Star by Peter Hamilton.

Next was Amber Case (the director of ESRI’s R&D Center) on Calm Technology. It’s quite entertaining and pleasantly sarcastic, like the bit about “the dystopian kitchen of the future.” Worth watching. She has made The Coming Age of Calm Technology by Mark Weiser and John Seely Brown available on the calm technology web site she’s just launched.

How can AR be used in a calm, unintrusive way? Ads and notifications popping up everywhere are exactly what I don’t want. In fact an AR version of Adblock Plus, to hide ads in the real world, would be very nice.

The last session of the morning started with “Making AR Real” by Steven Feiner, who teaches at Columbia and was another of the top AR people at the conference. If you haven’t read his 2002 Scientific American article Augmented Reality: A New Way of Seeing then have a look.

He showed some eyewear from the past few years, and then what we have now: current glasses are “see-through head-worn displays++,” in that they’re displays with at least one of an orientation tracker, input device, RGB camera, depth camera, computer or radio. Why didn’t all that come together in 1999? The pieces were there … but nothing had the power, and there was no networking possible (no fast mobile data like we have now) and hardly any content to see. The milieu was missing then, but it’s here now.

Idea: collaborative tracking. People could share and accept information from their sensors with people around them, because everyone will have sensors.

How will we manage all the windows? Systems now look like they did 40 years ago, or more, back to the Mother of All Demos. He and colleagues had the idea of environment management: “AR as computation æther embedding other tracked displays and the environment.” Move information from eyewear to a display, from a display to eyewear, or between two displays and see it midway on the eyewear. “How do we control/manage/coordinate what information goes where?” Where do we put things? Head space, body space, device space, world space? This needs to be researched. (How to keep all this calm, too, I wonder?)

Right after Feiner came a former student of his, Sean White (@seanwhite), who now works at Greylock, another Silicon Valley venture capital fund. His talk (“The Role of Wearables in Augmenting Worlds”) was practical and level-headed, nothing like Chang’s. He gave six things to think about when building an AR system:

- Why is it wearable/augmented?

- Microinteractions (mentioned Dan Ashbrook and his dissertation): how long to do something; 4s from initiation to completion

- Ready-to-hand vs present-at-hand (Heidegger, Winograd): example of holding a hammer and nailing in a nail

- Dispositions (Lyons and Profita): pose and relationship of device to user; resources; transitions cost; wearing Glass around the neck

- Aesthetics, form and self-expression (Donald Norman): emotion design, visceral, behavioural, reflective. Eyeglasses reflect who we are.

- Systems, not just objects: not just the artifact, but how it relates to other things.

I wasn’t expecting Heidegger to come up. Here I was at a high-tech conference where a good number of people were wearing cameras, but Borges and Heidegger were mentioned before Snowden.

There was more that day, including a fairly poor closing talk and panel and one of the most memorable musical performances I’ve ever seen, but I’ll leave it at that for now.

AWE 2014 Day One

Wednesday 28 May 2014 was the first day of Augmented World Expo 2014, and here are my notes on that, following on the AR standards preconference the day before, and skipping over the morning’s keynote where Robert Scoble was utterly unconcerned about privacy. The AugmentedRealityorg channel on YouTube has all the videos. (Congratulations again to them for making all the talks available for free, and so quickly.)

There was a birds of a feather session at 8 am. I put out a sign at a table: LIBRARIES. No one sat down until three fellows came along and needed a place to sit because all the other tables were full. They worked for DARPA and were modifying Android devices so soldiers could use and wear them in the field. It was the first time anyone had ever said MilSpec to me. “This isn’t like my usual library conferences,” I thought.

At nine, Ori Inbar (@comogard) kicked things off with an enthusiastic welcome. He’s done years of hard work on this event and on AR, and he was very warmly received. He set out five trends in AR right now:

- from gimmick to value

- from mobile to wearable (10 companies bringing glasses to market this year)

- from consumer to enterprise

- from GPS to 3Difying the world

- the new new interface

Then co-organizer Tish Shute (@tishshute) followed up. She mentioned “strong AR” (a combination of full eyewear and gestures) and wireless body area networks (new to me). Then there was Scoble and then Nir Eyal, who gave a confident AR-free talk about how to apply behaviourism to marketing to make more money.

But then it was Ronald Azuma! It was he who in 1997 (!) in A Survey of Augmented Reality set out the three characteristics a system must have to be augmented reality, and this definition still holds:

- Combines real and virtual;

- Interactive in real time;

- Registered in 3-D.

He’s working at Intel now, and gave a talk called “Leviathan: Inspiring New Forms of Storytelling via Augmented Reality.” Leviathan is the Scott Westerfeld novel, and Westerfeld wrote about the Intel Leviathan Project.

He listed four approaches to memorable and involving AR:

- take content that is already compelling and bring it into our world (as they did)

- reinforcing: like 110 Stories, Westwood Experience

- reskinning: take what reality offers and twist it, convert world around you to fictional environment, e.g. belief circles in Rainbows End

- remembering: much of the world is mundane, but what happened there is not; some memories are important to everyone, some to just you

A few talks later was Mark Billinghurst from the Human Interface Technology Lab New Zealand. He said he wanted to make Iron Man real, with a contact lens display, free-space hand and body tracking and speech/gesture recognition. (Iron Man came up several times at the conference, more than Rainbows End.) He talked about research they’ve done on multimodal interfaces, which work with both voice and gesture. Gesturing is best for some tasks, but combined with voice, the two complement each other well. A usability study of multimodal input in an augmented reality environment (Virtual Reality 17:4, November 2013) has it all, I think.

In the afternoon there was a great session on art and museums. The academics (like Azuma and Billinghurst, and Ishii the next day) and the artists were doing the most interesting and exciting work I saw at the conference. Most of the vendors just played videos from their web sites. The artists and academics are pushing things, trying new things, seeing what breaks, and always doing it with a critical eye and thinking about what it means in the world.

First was B.C. Biermann, who asked, “How can citizens make incursions into public spaces?” He posed an AR hypothesis: “Can emerging technologies, and AR in particular, democratize access to public, typically urban spaces, form a new mode of communication, and give a voice to private citizens and artists?” He’s done some really interesting work, some as part of Re+Public.

He ended with this question, which made me think about how art has changed, or perhaps how it hasn’t:

How can we use this technology for the benefit of the collective as opposed to an individualistic, material-seeking profit-seeking enterprise? Not that that’s necessarily a bad thing, but I think that technology can also be used in both verticals, a beneficial collective vertical and also maybe one that is monetizable.

Then Patrick Lichty spoke on “ARt: Crossing Boundaries.” He ran through a “laundry list of things that are on my scholarly radar,” covering a lot of ground quickly. There’s much good stuff to be seen here, too much to pull out and list, but watch it for a solid fifteen-minute overview of AR and art.

Nicholas Henchoz from EPFL-ECAL in Lausanne was next, with “Is AR the Next Media?”

Some (fragmentary?) quotes I wrote down:

- “Visual language and narrative principles for digital content. Relation between the real and the virtual world.”

- “Continuum in the perception of materiality. Direct link with the physical world. Credibility of an animated object. Real-time perception.”

- “The space around the object is also part of the experience.”

Henchoz and his team had a room set up with their AR art installations! It ran all through the conference. One piece was Ghosts, by Thomas Eberwein & Tim Gfrerer. It has a huge screen showing snowy mountains. When you stand in front of it and wave your arms, it sees you and puts your silhouette into the scene, buried up to your waist in the snow, in a long line with all the other people who had done the same. (That is, everyone from the past hour or two, or since the system last restarted: I asked if it stored everyone, but it doesn’t.)

They also had Tatooar set up. There was a stack of temporary tattoos, and if you put one on and looked at it on the monitor, it got augmented. Here’s my real arm and my augmented arm. It doesn’t show in the still photo, but the auggie was animated and had an eye that opened up.

Finally there was Giovanni Landi, who talked about three projects he’s done with AR and cultural heritage: AR views of Masaccio’s Holy Trinity fresco (particularly notable for its use of perspective), the Spanish Chapel at the Basilica of Santa Maria Novella in Florence, and panoramic multispectral imaging of early 1900s Sicilian devotional art. I took very few notes here because his talk was so interesting.

A later session involved Matthew Ramirez, a lead on AR at Mimas in Manchester, where they use AR in higher education. Their showcase has videos of all their best work, and it’s worth a look.

He listed some lessons learned:

- use of AR should be more contextual and linked to the object

- best used in short bite-sized learning chunks

- must deliver unique learning values different from online support

- user should become less conscious of the technology and more engaged with the learning/object

- users learn in different ways and AR may not be appropriate for all students

I chatted with him later and he also said they’ve had much more success working with faculty and courses with lots of students and where the tools and techniques can be adapted for other courses. The small intense boutique projects don’t scale and don’t attract wide interest.

The last one from the day I’ll mention was a talk by Seth Hunter, formerly of the MIT Media Lab and now at Intel, “A New Shared Medium: Designing Social Experiences at a Distance.” He talked about WaaZam, which merges people in different places together into one shared virtual space (a 2.5D world, he called it). Skype lets people talk to each other over video, but everyone’s in their own little box. Little kids find that hard to deal with—he mentioned parents who are away from their children, and how much more engaging and involving and fun it is for the kids when everyone is in the same online space.

“The new shared medium has already been forecasted by artists and hackers,” he said. This was a nice talk about good work for real people.

Hunter was the only person I saw at AWE 2014 to show a GitHub URL! Here it is: https://github.com/arturoc/ofxGstRTP. All the code they’re developing is open and available.

Miskatonic University Press
